Paradigm Shifts in the History of Medicine

Medical theory and practice have undergone many waves of change over the centuries, with many changes to come. Here, I explore paradigm shifts in microbiology as a part of medicine.

The history of medical advancement is marked by bursts of revolutionary change in how humans perceive the natural world. In many cases, these changes came with the emergence of new theories and the abandonment of others. Everything from disease causation to therapeutic strategy was a point of debate and change. Were disease symptoms due to spiritual afflictions, a failure to appease the gods, or even the result of being targeted by evil magic? Or were they due to imbalances in the bodily humors, injury, or some other natural cause? Were certain foods, exercise, prayers, or rest the best therapies? These are some of the questions people have asked over the centuries and, in some cases, continue to ask today. The cultural framework of the medical domain greatly influences these perspectives, so it is no wonder that strong opinions on science and medicine have varied across cultures and time. No field of medicine illustrates this point quite as well as infectious disease.

Germ Theory

Although we now live in an era of broad acceptance of the germ theory of disease (the concept that microorganisms can invade a host organism and cause disease), this was a revolutionary concept that took centuries of experiments and observations to gain acceptance. For example, while people could observe food spoilage at home, there was little understanding of the process. This is most evident in the theory of spontaneous generation: the idea that living things (such as microbes and worms) arose from nonliving things (such as a cut of meat).

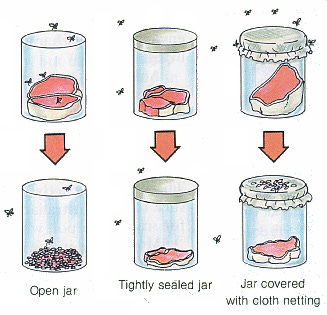

Firmly held belief in spontaneous generation hampered the understanding of how infectious disease is transmitted for centuries, despite the efforts of several talented scientists to disprove it. For example, in the late 17th century, the Italian physician Francesco Redi tested this idea with an experiment in which pieces of meat were placed in jars that were either left open, covered in gauze, or sealed with a cork. Flies laid eggs on the meat in the open jar, which became covered in maggots, but no maggots were observed on the meat in the corked or gauze-covered jars. This simple yet elegant experiment should have put the argument to rest. Still, testing continued into the 19th century, when the experiments of Louis Pasteur and John Tyndall with sterile broth exposed to air finally resolved the matter.

However, evidence of careful observation of disease transmissibility appears much earlier than this period. For example, Biblical laws regarding basic sanitation and the burial of solid waste (fecal material) have been in place at least since the time of Moses (circa 13th century BCE). The Hippocratic Corpus (c. 400 BCE) describes several sanitary and surgical procedures that indicate an understanding that disease could pass from person to person through inanimate objects, such as clothing. Varro (116-27 BCE), the great Roman scholar and polymath, proposed that tiny animals entered the body through the mouth and nose to cause disease, a revolutionary concept at the time.

Miasma theory was introduced by Galen (129-216 CE) and expanded upon the humoral theory of the Hippocratic Corpus. The miasma theory of contagion proposed that infectious diseases such as cholera and the Black Death (plague) were caused by exposure to a noxious form of air, or miasma, which emanated from decomposing organic matter and was especially linked to the night air. This concept was prominent from the Middle Ages through the mid-1800s, and while it was one small step closer to germ theory, it didn't recognize the role of microbes, let alone the role of specific microbes, in disease causation and spread. In the Canon of Medicine (1025 CE), Avicenna elaborated on the miasma theory of contagion by describing how disease could be transmitted between people, through the breath of the sick (associated with symptoms of tuberculosis), and even through water and soil.

A Leap in Technology: The Microscope is Invented

While it may be hard to believe, it took nearly two thousand years for Varro's concept of tiny animals causing certain diseases to develop into germ theory. Much of the delay was due to a lack of technology that would let humans see these tiny organisms with their own eyes. That required technological innovations like the microscope.

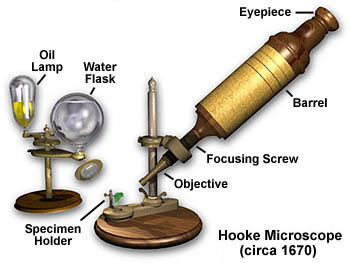

Robert Hooke (1635-1703 CE) refined the design of the compound microscope and coined the name "cell" for this most basic unit of life in his book Micrographia (1665 CE). As he gazed through his microscope at a slice of cork, he was reminded of the small rooms (monastic cells) where monks lived, hence the name. You can see a digital version of the 358-year-old book, Micrographia, on the British Library's website.

A master craftsman of glass lenses, Antonie van Leeuwenhoek (1632-1723 CE) built single-lens microscopes and became the first person to observe and document microbial life forms, which he called "small animals" and which we know today as microorganisms. The Dutch businessman and self-taught scientist was also the first to see red blood cells, spermatozoa, muscle fibers, bacteria, and more, opening up a whole new field of scientific inquiry. For this reason, he is known as the "Father of Microbiology".

Learning about the Links Between Microbes and Disease

Although recognizing that microbial life abounds was certainly a transformational paradigm shift, it did not immediately take hold in the field of medicine. That required roughly two more centuries of research and observation by scientists and physicians. Through his work on the diseases of silkworms, French scientist Louis Pasteur (1822-1895 CE) made groundbreaking advances in associating specific microbes with specific diseases.

Around this same time, his German contemporary, Robert Koch (1843-1910 CE) developed methods to grow bacteria in pure culture (not contaminated with other species), enabling experiments with microbes and animals that led to the development of four postulates (known as Koch’s postulates):

The specific causative agent must be found in every case of the disease;

The disease organism must be isolated in pure culture;

Inoculation of a sample of the culture into a healthy, susceptible animal must produce the same disease;

The disease organism must be recovered from the inoculated animal.

Microbes and Infection in the Medical Clinic

In hospitals across Europe, however, most physicians were not yet aware of the implications of these findings or simply didn't see their relevance to clinical practice. Even before these findings were published, the Hungarian physician-scientist Ignaz Semmelweis (1818-1865 CE) was making his own observations in the clinic. While working in a Vienna obstetrical clinic, he became particularly concerned with puerperal fever, an often fatal disease also known as "childbed fever", among women in childbirth.

Women assisted by physicians died of the disease at three times the rate of those assisted by midwives. An explanation for this difference in mortality came in 1847, when he linked the post-mortem exams, or autopsies, that physicians were conducting on women who had died to the spread of new cases. Handwashing was not a commonly recommended medical practice at the time, so the doctors were infecting healthy women in childbirth with infectious material from the bodies of women who had died of the disease. Semmelweis advocated the revolutionary practice of washing the hands with a solution of calcium hypochlorite (chlorine) between autopsies and patient examinations, and this significantly reduced deaths from puerperal fever among his clinic's patients.

However "normal" handwashing may seem today, especially in a hospital setting, it was not common practice at the time, and his views strongly conflicted with those of his peers and the medical establishment. Other doctors not only rejected his ideas but were also offended by the suggestion that their own hands could be responsible for their patients' deaths. By 1861, Semmelweis was suffering from severe depression and may also have had a neurodegenerative disease. He was committed to a mental institution in 1865, where he tragically died soon after being beaten by several guards and confined in a straitjacket in a dark cell.

In Britain, the surgeon Joseph Lister (1827-1912 CE) was also facing pushback on his ideas about antiseptics. His interest was in wound infection. Applying Pasteur's advances in microbiology, and having learned that creosote (a byproduct of coal tar) was used to treat sewage, he explored another coal tar product, carbolic acid (also known as phenol), as a chemical antiseptic agent. Through systematic tests on medical instruments, wound sites, and his own hands, he found that carbolic acid solution greatly reduced the incidence of wound infections like gangrene. This shift toward antiseptic surgical technique earned him the title "father of modern surgery".

Microbes that Heal

Sixteen years after Lister's death, a Scottish microbiologist made a curious observation: a zone of clearing around a speck of mold that had settled onto a petri dish of bacteria. Alexander Fleming's (1881-1955 CE) observation of the activity of Penicillium notatum, and the subsequent years of research by Fleming, Howard Florey, and Ernst Chain, would earn the trio the 1945 Nobel Prize in Physiology or Medicine for the game-changing discovery of penicillin, opening the gates to the Golden Era of antibiotic discovery. This marked a major shift in the survival of patients afflicted with infections ranging from puerperal fever to syphilis, strep throat, gangrene, and more. It also coincided with an era of discovering active substances from plants and a movement toward single-compound "magic bullet" drugs and away from more holistic health strategies concerning diet, rest, exercise, and, importantly, the use of medicinal herbs.

The Takeaway

If there are any lessons to be learned from the two thousand years of scientific and medical advancements highlighted here, perhaps the most critical one is the recognition that even when we humans think we know all there is to know about our health, we really don’t. There are vast amounts of knowledge that await discovery and development. Paradigm shifts take time and tremendous amounts of human ingenuity, careful observation, and persistence.

Yours in health, Dr. Quave

Cassandra L. Quave, Ph.D. is a scientist, author, speaker, podcast host, wife, mother, explorer, and professor at Emory University School of Medicine. She teaches college courses and leads a group of research scientists studying medicinal plants to find new life-saving drugs from nature. She hosts the Foodie Pharmacology podcast and writes the Nature's Pharmacy newsletter to share the science behind natural medicines.